Agence: a dynamic film exploring multi-agent systems and human agency

Agence is a dynamic, interactive film authored by three parties: 1) the director, who establishes the narrative structure and environment; 2) intelligent agents, driven either by reinforcement learning (RL) or by scripted AI (hierarchical state machines); and 3) the viewer, who can interact with the system to affect the simulation. We trained the RL agents in a multi-agent setting to control some (or all, depending on user choice) of the agents in the film. You can download the game at the Agence website.

GANterpretations

GANterpretations is an idea I published in this paper, which was accepted to the 4th Workshop on Machine Learning for Creativity and Design at NeurIPS 2020. The code is available here.

At a high level, it uses the spectrogram of a piece of audio (from a video, for example) to “draw” a path in the latent space of a BigGAN.
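As a rough illustration of the idea (not the code from the paper), the sketch below uses only NumPy: it computes a magnitude spectrogram and uses each frame's energy to decide how far to advance along a piecewise-linear path between a few latent points. The anchor points, the energy-based pacing, the function names `spectrogram` and `latent_path`, and the latent dimension of 128 are all assumptions made for this example; the BigGAN generator itself is left out.

```python
import numpy as np

def spectrogram(signal, frame_len=256, hop=128):
    """Magnitude spectrogram via a windowed short-time FFT (frames x bins)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop : i * hop + frame_len] * window
                       for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))

def latent_path(spec, anchors):
    """Walk through the anchors, advancing in proportion to each frame's energy."""
    energy = spec.sum(axis=1) + 1e-12  # small offset avoids dividing by zero on silence
    # Cumulative energy, normalised to [0, 1], gives the position along the path.
    progress = np.cumsum(energy) / energy.sum()
    # Convert to segment coordinates: 0 .. len(anchors) - 1.
    pos = progress * (len(anchors) - 1)
    idx = np.minimum(pos.astype(int), len(anchors) - 2)
    t = (pos - idx)[:, None]
    # Linear interpolation inside the current segment.
    return (1 - t) * anchors[idx] + t * anchors[idx + 1]

# Hypothetical input: a one-second 440 Hz tone that gets steadily louder.
sr = 8000
time = np.arange(sr) / sr
audio = np.sin(2 * np.pi * 440 * time) * np.linspace(0, 1, sr)

rng = np.random.default_rng(0)
anchors = rng.standard_normal((4, 128))  # 4 made-up latent points, d = 128 (assumed)
path = latent_path(spectrogram(audio), anchors)
```

Each row `path[i]` is a latent vector that could be passed to a generator to render one frame, so louder passages move through the latent space faster than quiet ones.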

The following video walks through the process:

GANs

GANs are generative models trained to produce images that resemble those in a given dataset. They learn a latent space $ Z\subseteq\mathbb{R}^d $ in which each point $ z\in Z $ generates a unique image $ G(z) $, where $ G $ is the generator of the GAN. When trained properly, this latent space is structured: nearby points generate similar images.

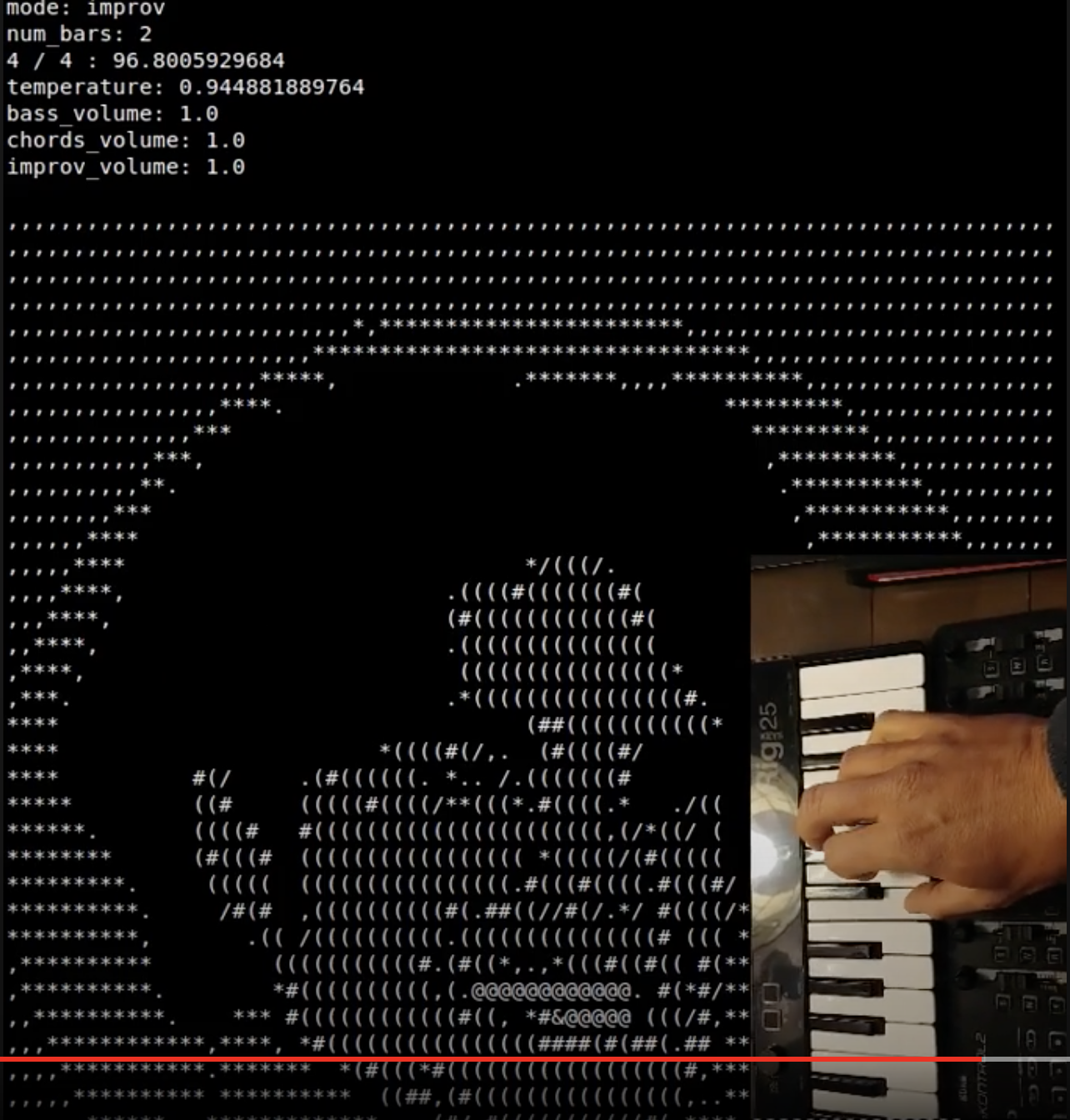
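One common way to see this structure, offered here as an illustration rather than as a description of any particular codebase, is to interpolate linearly between two latent points $ z_0, z_1\in Z $ (assuming the segment between them stays inside $ Z $):

$$ z(t) = (1-t)\,z_0 + t\,z_1, \qquad t\in[0,1]. $$

Since $ G $ is a continuous function, the images $ G(z(t)) $ change gradually from $ G(z_0) $ to $ G(z_1) $ as $ t $ goes from 0 to 1.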

ML-Jam: Performing Structured Improvisations with Pre-trained Models

This paper, published in the International Conference on Computational Creativity, 2019, explores using pre-trained musical generative models in a collaborative setting for improvisation.

You can read more details about it in this blog post.

You can also play with it in this web app!

If you want to play with the code, it is here.

Demos

Demo video playing with the web app:

Demo video jamming over Herbie Hancock’s Chameleon:

Demo video over free improvisation: