The Difficulty of Passive Learning in Deep Reinforcement Learning

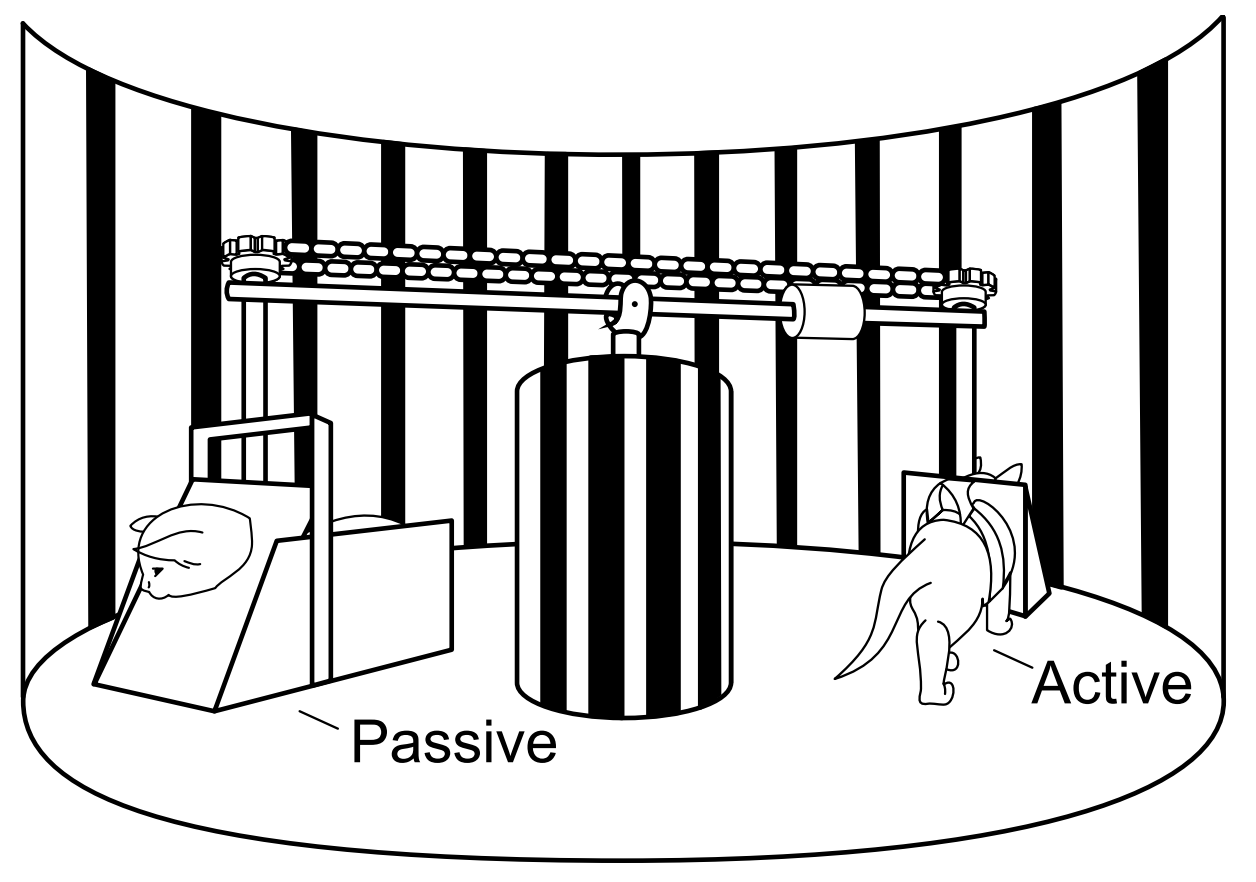

We propose the “tandem learning” experimental design, where two RL agents learn from identical data streams, but only one interacts with the environment to collect the data. We use this experimental design to study the empirical challenges of offline reinforcement learning.

Georg Ostrovski, Pablo Samuel Castro, Will Dabney

This blogpost is a summary of our NeurIPS 2021 paper. We provide two Tandem RL implementations: this one based on the DQN Zoo, and this one based on the Dopamine library.
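
To make the tandem setup concrete, here is a minimal sketch using tabular Q-learning on a toy chain environment. Everything in it (the environment, `step`, `q_update`, `run_tandem`, and the hyperparameters) is a hypothetical illustration and is not taken from the DQN Zoo or Dopamine implementations; the only point is that the two learners are initialised independently, only the active one picks actions, and both are updated on the same transitions.

```python
import random

# Hypothetical toy chain: states 0..4, action 1 moves right, action 0 moves left.
N_STATES, N_ACTIONS, GOAL = 5, 2, 4


def step(state, action):
    """Toy dynamics: reward 1.0 on reaching the right-most state."""
    next_state = min(state + 1, GOAL) if action == 1 else max(state - 1, 0)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def q_update(q, transition, lr=0.1, gamma=0.9):
    """One tabular Q-learning update on a single transition."""
    s, a, r, s2, done = transition
    target = r if done else r + gamma * max(q[s2])
    q[s][a] += lr * (target - q[s][a])


def init_q(rng):
    """Small random initial values, drawn separately for each agent."""
    return [[rng.uniform(-0.01, 0.01) for _ in range(N_ACTIONS)]
            for _ in range(N_STATES)]


def run_tandem(num_steps=2000, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    active, passive = init_q(rng), init_q(rng)  # independent initialisation
    state = 0
    for _ in range(num_steps):
        # Only the active agent chooses actions (epsilon-greedy on its own Q).
        if rng.random() < epsilon:
            action = rng.randrange(N_ACTIONS)
        else:
            action = max(range(N_ACTIONS), key=lambda a: active[state][a])
        next_state, reward, done = step(state, action)
        transition = (state, action, reward, next_state, done)
        # Both agents learn from the identical transition.
        q_update(active, transition)
        q_update(passive, transition)
        state = 0 if done else next_state
    return active, passive


active_q, passive_q = run_tandem()
```

The passive table never influences which data gets collected, which is the property the tandem design isolates.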

MICo: Learning improved representations via sampling-based state similarity for Markov decision processes

We present a new behavioural distance over the state space of a Markov decision process, and demonstrate the use of this distance as an effective means of shaping the learnt representations of deep reinforcement learning agents.

Pablo Samuel Castro*, Tyler Kastner*, Prakash Panangaden, and Mark Rowland

This blogpost is a summary of our NeurIPS 2021 paper. The code is available here.

The following figure gives a nice summary of the empirical gains our new loss provides, yielding an improvement on all of the Dopamine agents (left), as well as over Soft Actor-Critic and the DBC algorithm of Zhang et al., ICLR 2021 (right). In both cases we are reporting the Interquartile Mean as introduced in our Statistical Precipice NeurIPS'21 paper.
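
For readers unfamiliar with the metric, the interquartile mean discards the bottom 25% and top 25% of the scores and averages what is left. The function below is a minimal pure-Python sketch of that definition; the name `interquartile_mean` and the example scores are made up for illustration, and this is not the code used to produce the figure.

```python
def interquartile_mean(scores):
    """Mean of the middle 50% of the scores (bottom and top 25% dropped)."""
    if not scores:
        raise ValueError("scores must be non-empty")
    ordered = sorted(scores)
    cut = int(len(ordered) * 0.25)  # number of scores trimmed from each end
    middle = ordered[cut:len(ordered) - cut]
    return sum(middle) / len(middle)


# Hypothetical scores: one outlier run barely affects the result.
print(interquartile_mean([1, 2, 3, 4, 5, 6, 7, 1000]))  # 4.5
```

Compared with a plain mean, a single outlier run has little effect; compared with a median, more of the runs contribute to the estimate.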

Losses, Dissonances, and Distortions

Exploiting the creative possibilities of the numerical signals obtained during the training of a machine learning model.

I will be presenting this paper at the 5th Machine Learning for Creativity and Design Workshop at NeurIPS 2021.

The code is available here.

You can see an “explainody” video here:

Introduction

In recent years, there has been a growing interest in using machine learning models for creative purposes. In most cases, this is with the use of large generative models which, as their name implies, can generate high-quality and realistic outputs in music, images, text, and other media. The standard approach for artistic creation using these models is to take a pre-trained model (or set of models) and use them for producing output. The artist directs the model’s generation by “navigating” the latent space, fine-tuning the trained parameters, or using the model’s output to steer another generative process (e.g. two examples).

Introducing MUSICODE Phase 2

A new phase for a musical ode to musical code. More focused on performance and coding, more geared towards people with a technical background.

Subscribe to the YouTube channel!

You can find the code I use for each episode here!

You can see the first phase here.

Episode 5: Repeats & Loops

The code for this episode is available here.

Loops are such an essential part of programming that I knew I’d have to make an episode on them at some point. A natural musical analogue is musical repeats, so the whole episode came fairly naturally!
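
The analogy between loops and repeats can be sketched in a few lines of Python. This is only an illustration, not the code from the episode: the function name `play_with_repeats` and the note names are made up.

```python
def play_with_repeats(phrase, times=2):
    """Expand a phrase the way a repeat sign would: play it `times` times."""
    performed = []
    for _ in range(times):  # the loop plays the role of the repeat sign
        performed.extend(phrase)
    return performed


phrase = ["C4", "E4", "G4"]  # hypothetical three-note phrase
print(play_with_repeats(phrase))
# ['C4', 'E4', 'G4', 'C4', 'E4', 'G4']
```

Just as a repeat sign saves writing the same bars twice, the loop saves writing the same instructions twice.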

I thought it’d be fun to have some beats to accompany the piano, so I used SuperCollider for that. That proved to be the most challenging part of the episode, as getting the timing right was really hard. A big part of the difficulty is that there’s a mechanical latency induced by the piano, since the hammers have to physically strike the strings! In the end I’m pleased enough with the output, although I think I could have done better…

Tips for Reviewing Research Papers

The NeurIPS 2021 review period is about to begin, and there will likely be lots of complaining about the quality of reviews when they come out (I’m often guilty of this type of complaint).

I decided to write a post describing how I approach paper-reviewing, in the hope that it can be useful for others (especially those who are new to reviewing) in writing high-quality reviews.

I’m mostly an RL researcher, so a lot of the tips below are mostly from my experience reading RL papers. I think many of the ideas are applicable more generally, but I acknowledge some may be more RL-specific.

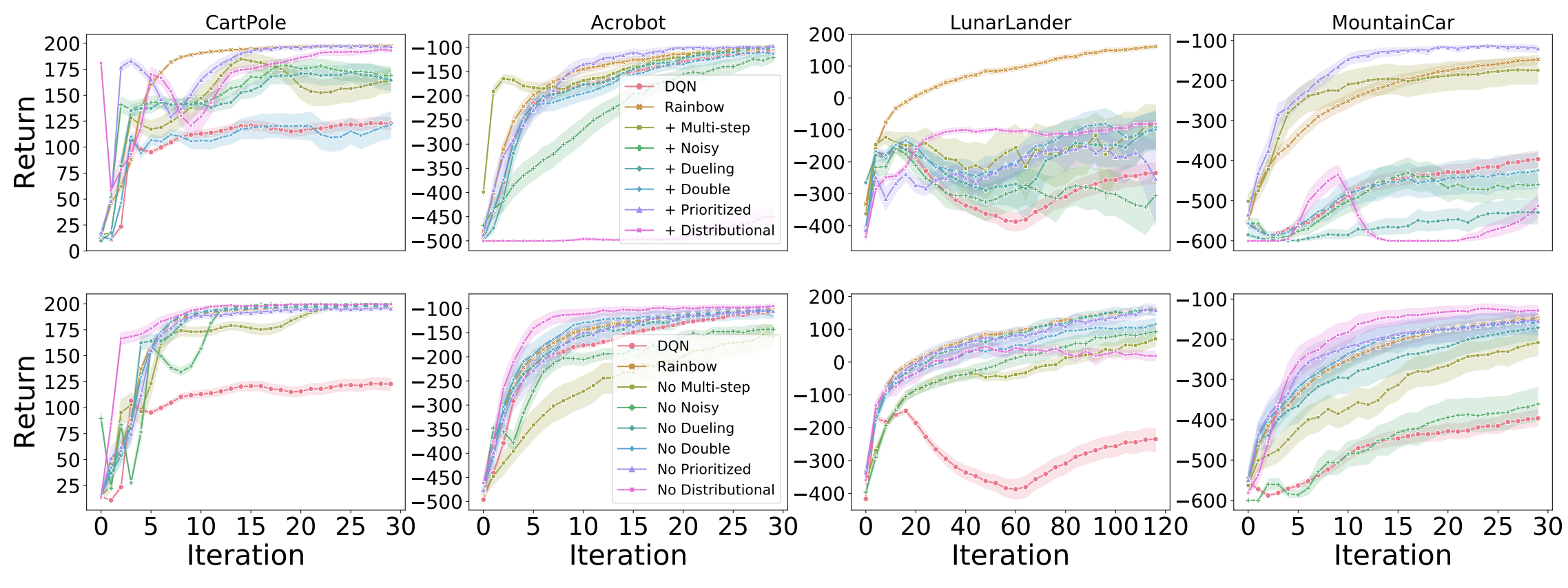

Revisiting Rainbow: Promoting more insightful and inclusive deep reinforcement learning research

We argue for the value of small- to mid-scale environments in deep RL for increasing scientific insight and helping make our community more inclusive.

Johan S. Obando-Ceron and Pablo Samuel Castro

This is a summary of our paper, which was accepted at the Thirty-eighth International Conference on Machine Learning (ICML'21). (An initial version was presented at the deep reinforcement learning workshop at NeurIPS 2020.)

The code is available here.

You can see the Deep RL talk here.

Episode 4: Live Coding & Jazz

The code for this episode is available here.

I had a different idea for the fourth episode, but then I saw John McLaughlin’s tweet about International Jazz day, and decided to do something for that instead.

Obviously I’d talk about Jazz in the musical section, but it wasn’t clear yet what part of Jazz I’d talk about. I spoke to a few people and it seemed like a good idea would be to talk about improvisation, and how jazz musicians do it; in particular, I’m hoping this helps people who don’t “get” jazz to understand what we’re doing when we play it, and that we’re not just playing random notes! :)

Episode 3: Leitmotifs & Variables

The code for this episode is available here.

I had it in my head that the third episode would talk about variables in the section about Computer Science. Originally I thought the musical section would be about chords, but that didn’t quite fit with variables. Then I thought about key signatures, thinking that these are kind of like variables in the sense that you can shift any song into different pitches just by changing key signatures; but again, I wasn’t very content with the connection. While walking Lucy (my dog) one day it hit me that leitmotifs are actually quite similar to variables in the sense that you can reference them at any point, and they hold a particular “value” when called.
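
A rough sketch of that connection in Python, with a made-up motif and a hypothetical `build_score` helper (neither comes from the episode's code): the motif is defined once, referenced wherever it is needed, and every reference yields whatever “value” it currently holds.

```python
def build_score(motif):
    """Reference the same motif at several points in a piece."""
    intro = motif + ["rest"]
    finale = motif + motif
    return intro + ["F4", "A4"] + finale


hero_motif = ["G4", "C5", "E5"]  # hypothetical leitmotif
print(build_score(hero_motif))
# ['G4', 'C5', 'E5', 'rest', 'F4', 'A4', 'G4', 'C5', 'E5', 'G4', 'C5', 'E5']
```

Passing a different motif changes every place it appears, much like reassigning a variable.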

Episode 2: Bits & Semitones

The code for this episode is available here.

The idea for doing something with bits seemed kind of natural to me as a second episode. After covering what “computation” is, why not cover what computers actually “see” when they run computations?

Given that bits make up everything inside a computer’s software, I wanted a musical topic that was inside every type of music (at least in Western music). Initially I was thinking of doing scales, but as I was developing this idea it dawned on me that there is a very close relationship between semitones and tones (or between half-steps and whole-steps) and the zeros and ones of the binary system.
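
One way to picture this relationship is to write a scale as a string of bits. The sketch below assumes an encoding chosen purely for illustration (1 for a whole-step, 0 for a half-step) and uses MIDI note numbers, where 60 is middle C; the names `MAJOR_PATTERN` and `scale_from_bits` are hypothetical and not from the episode's code.

```python
# Major scale as whole/half steps: W W H W W W H, with W = 1 and H = 0.
MAJOR_PATTERN = "1101110"


def scale_from_bits(root, pattern):
    """Build a scale of MIDI note numbers from a root and a bit pattern."""
    notes = [root]
    for bit in pattern:
        step = 2 if bit == "1" else 1  # whole-step = 2 semitones, half-step = 1
        notes.append(notes[-1] + step)
    return notes


print(scale_from_bits(60, MAJOR_PATTERN))
# [60, 62, 64, 65, 67, 69, 71, 72]
```

Starting from middle C this gives the C major scale, ending one octave (12 semitones) above the root.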